If you’re a creative pro, chances are you’ve heard about the recent controversy over the update to Adobe's AI Terms of Service. In case you've been off the grid, here's the TL;DR version: Adobe sent a notice informing users that it could access their content through both manual and automated methods.

This caused a major stir, with many worrying that Adobe might use its users' work to train its AI models.

Ever tried building trust with Artificial Intelligence? Spoiler alert: it doesn't work (yet).

With the massive rise in popularity of generative AI tools, tech companies are rushing to exploit the bubble and maximise their profits. In doing so, they seem to be leaving safety and common sense behind.

Have they not learned anything from the current state of social media?

Anyway…

Adobe AI Terms of Service, in a Nutshell

Picture this: you're opening a project in Photoshop, Illustrator, or Premiere Pro to pick up an unfinished piece and meet a deadline. Suddenly, Adobe hits you with a new Terms & Conditions pop-up. Annoying, right? Most of you wouldn't even read it; you'd just click the button to get rid of it and get on with your task.

Well, these updated terms now allow Adobe to access your content for several reasons, some of which left many users scratching their heads.

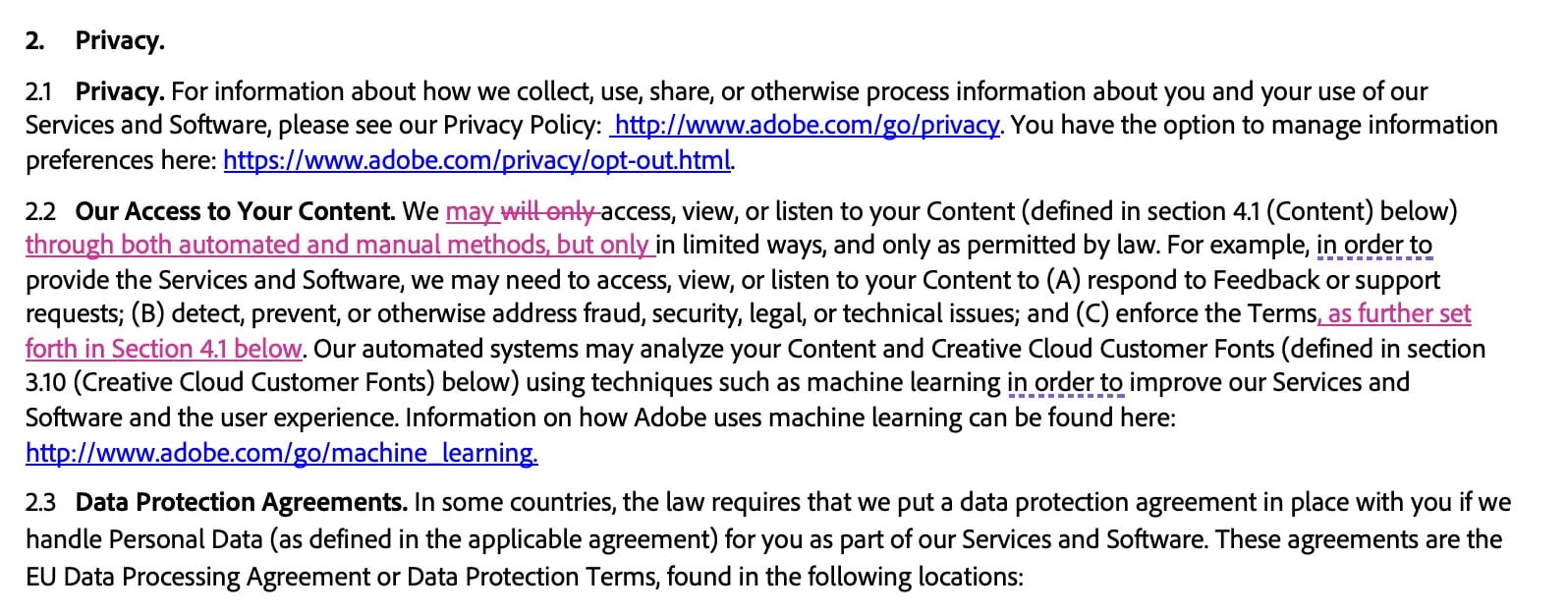

Adobe's AI ToS now state that the company may “access, view, or listen” to your content, but only “in limited ways and as permitted by law”.

This includes reviewing feedback, handling support requests, preventing fraud, and resolving security issues. The terms also state that Adobe can remove content that violates them.

While some of this makes sense, like helping with support, the part where Adobe says it can “analyse your Content and Customer Fonts […] using techniques such as machine learning to improve our Services and Software” raised eyebrows.

It sounds conveniently vague, a lot like “We're watching everything you do to make our AI smarter”.

One user summed it up perfectly on social media: “Using machine learning for ‘content moderation’ sounds like code for ‘we're installing spyware to train AI on everything you do’”.

Concerns have also been raised that the terms could put users in breach of NDAs and other contracts with their clients.

And what if you don't want to accept these new terms? Some users are saying you can't even cancel your subscription without first agreeing.

Adobe's Clarification

Adobe has reassured everyone that the new Adobe AI Terms of Service don't give it access to files on your computer, only to those in its cloud storage. Some users predicted years ago that the cloud would not be an entirely private space. They may have been right all along.

After a wave of backlash, Adobe tried to smooth things over, saying the policy isn't new but has simply been reworded for greater transparency. Creative Bloq published Adobe's clarification in a blog post:

“This policy has been in place for many years. As part of our commitment to being transparent with our customers, we added clarifying examples earlier this year to our Terms of Use regarding when Adobe may access user content. Adobe accesses user content for a number of reasons, including the ability to deliver some of our most innovative cloud-based features, such as Photoshop Neural Filters and Remove Background in Adobe Express, as well as to take action against prohibited content. Adobe does not access, view or listen to content that is stored locally on any user’s device”.

In a rare move, Adobe posted further clarification on social media and in a blog post, acknowledging the flood of questions and aiming to clear things up. It provided a side-by-side comparison of the old and new terms, arguing that little had changed.

Adobe also clarified that its Firefly generative AI models are not trained on customer content. They only use licensed content, such as Adobe Stock, and public domain content.

However, some artists remain sceptical.

Scott Belsky, Adobe’s Chief Strategy Officer, admitted, “Trust and transparency couldn’t be more crucial these days, and we need to be clear when it comes to summarising terms of service in these pop-ups.”

Trust

And he's absolutely right. In a world where AI can potentially mimic human creativity, users must feel confident that their work is respected and protected. Unclear terms of service or ambiguous language can quickly erode that trust, leaving creators feeling vulnerable and exploited.

And there it is: the crux of the problem. As Artificial Intelligence becomes more ingrained in our lives and harder to detect, trust becomes paramount. Adobe’s current standing in the trust department isn’t great, partly because of controversial policies like this one and internal discontent with its AI strategy.

But Adobe's not the only one facing the music. From Instagram to Disney, several big names have had to clarify or even apologise for their use of AI. And we know that Apple will finally dive into the AI pool at its next WWDC.

This tech is only going to get more prevalent. If these companies want to keep their users' confidence, they’ll need to double down on trust and transparency.

So, what's the takeaway for tech brands? Clear communication and trust-building aren't just nice-to-haves; they're essential. And trust ain't built with algorithms, folks.

If you don't want to end up in Adobe's shoes, take note.